Executive summary

- The Data Quality (DQ) Framework is part of a compendium of data-centric governance products that enables NATO's ongoing Digital Transformation, alongside key deliverables such as the Data Strategy for the Alliance, the Data Centric Reference Architecture for the Alliance (DCRA), the Data Exploitation Framework (DEF) Strategic Plan, and the Data Management Policy.

- The DQ Framework for the Alliance complements multiple concurrent work streams including, among others, the Digital Transformation Implementation Strategy (DTIS), the Alliance Data Sharing Ecosystem (ADSE), and the Artificial Intelligence (AI) Strategy.

Background

- In 2008, the North Atlantic Council (NAC) approved the NATO Information Management Policy (NIMP), setting core principles for managing information effectively, efficiently, and securely. This was followed by the Data Management Policy (DMP), which clarified how data, when applied in context, becomes meaningful and valuable information.

- NATO’s strategic goal of achieving information superiority and data-driven decision-making at the tactical, operational, and strategic levels reiterated the need to fully leverage data [1] as a strategic asset. The NATO Data Exploitation Framework (DEF) Policy emphasized the interchangeability of data and information, while the DEF Strategic Plan outlined the Lines of Effort (LoEs) guiding data exploitation across Allies and the NATO Enterprise.

- One such LoE is the development of a NATO Data Quality (DQ) Framework to support the implementation of the DEF Policy’s principles. The Framework establishes a consistent approach to assessing and improving data quality and provides guidance for Allies, the NATO Enterprise, and Partners to curate quality data for the reliable and secure use and training of Artificial Intelligence (AI)-enabled analytical tools.

- Enhancing access to quality data across the Alliance is a key pillar of NATO’s strategy for effective data exploitation. These efforts will rely on and contribute to the ongoing initiatives towards establishing digital transformation and management capabilities, and NATO’s Digital Backbone.

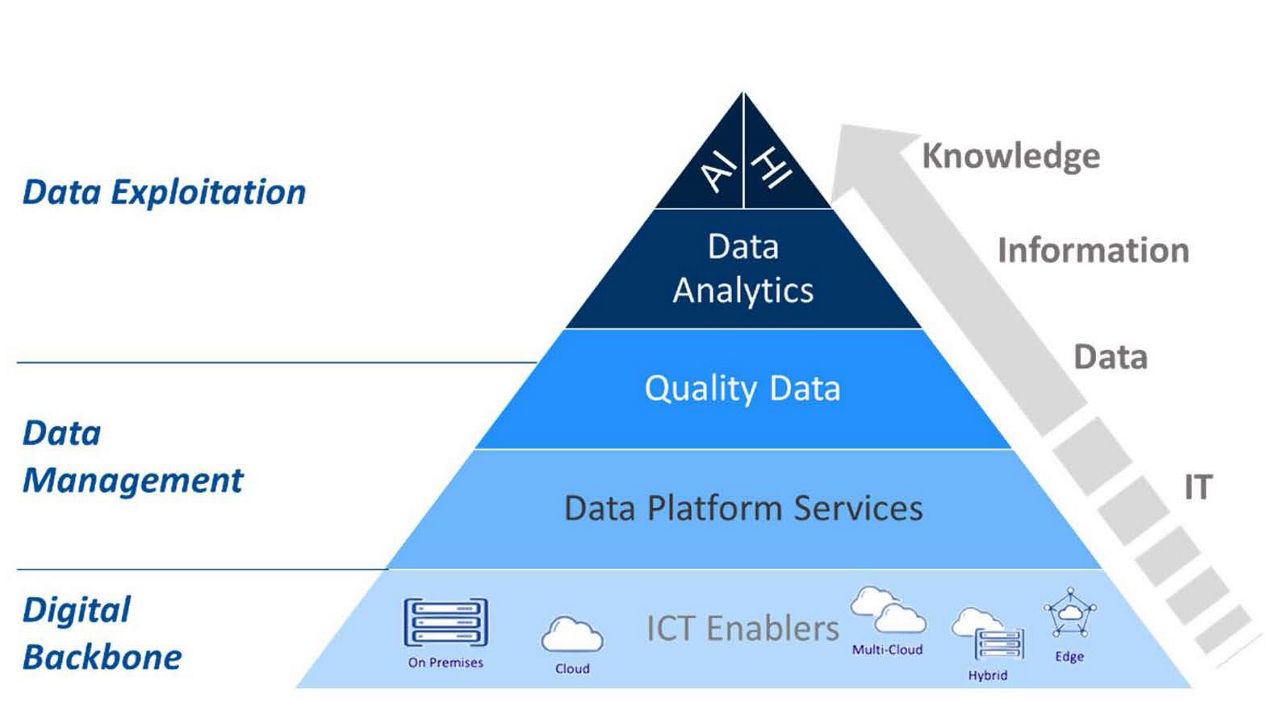

- The Alliance’s journey to becoming a data-centric organization relies on new processes and technologies, at the heart of which lies quality data (Figure 1).

Figure 1: Quality Data at the heart of data-centric organization

Aim

- The DQ Framework aims to harmonize NATO’s data-related policy landscape by establishing a shared understanding of the principles, concepts, and definitions essential for treating data as a strategic asset – collected, protected, managed, and shared effectively. It will guide collective efforts across the NATO Enterprise, Allies, and Partners to assess, manage, and improve the quality of data generated, exchanged, or exploited. The Framework provides the necessary structures and outlines key quality attributes to support these efforts, offers recommendations for updating relevant policies, directives, standards, and enablers, and defines the next steps for implementing data quality processes, practices, and procedures.

Applying the NATO Data Quality Framework

- The Framework is organized around the following elements:

- Data quality dimensions against which regular and automated assessments of data quality can be made;

- Guidance on Data quality metadata to support better use of existing NATO metadata standards to communicate the quality of data assets;

- A guide to Data quality management as an iterative cycle to help organisations identify, mitigate, and remediate data quality issues at all stages of the data exploitation lifecycle.

- In support of the above, the DQ Framework provides guidance on practical tools and techniques to assess, communicate, and improve data quality throughout an organization:

- Data quality code of best practice, used to guide the implementation of data quality efforts within an organization;

- Data quality considerations for the NATO Data-Centric Reference Architecture to emphasize data integrity throughout the API lifecycle;

- Data quality considerations for Machine Learning Operations (ML Ops), to support the ongoing work for responsible AI toolkits and standards.

Dimensions and Indicators – How to measure data quality

- Data Quality Dimensions are a set of measurable attributes of data that can be individually assessed, interpreted, and improved. DQ Dimensions can be used to assess data quality and identify data quality issues along commonly understood and recognized aspects of data assets.

- The Data Quality Dimensions for the Alliance are:

- Accuracy: Degree to which data values are sufficiently representative of the real-world objects they intend to describe.

- Completeness: Degree to which all data required for a particular use is present and available for use. Components of this dimension include the rules defining the conditions under which missing values are acceptable or unacceptable, and the rules determining which data is critical and which is optional.

- Consistency: Degree to which data sets and data models are consistent across instances within an organization, functional area, or community of interest. Values of data should not contradict other values representing the same entity, and should not contradict data in another data set.

- Uniqueness: Degree to which no duplication of data occurs within a dataset or within an organization. This can include duplicate entries in a single dataset, or duplicate, unsynchronized copies of a dataset within an organization.

- Timeliness: Degree to which data values are up to date and current, including whether there are latencies or lags in reporting.

- Validity: Degree to which data conforms to predefined standards, business rules, or constraints.

- Granularity: Degree to which data has been rounded or aggregated. Describes the level of detail or precision captured by the data.

- While DQ Dimensions describe the particular characteristics of data assets, Data Quality Indicators (DQI) are the means used to measure these attributes. DQIs combine data profiling (how the data looks) with data validation (how the data should look) to measure and score data quality against the corresponding dimension.

- DQIs must be specific to the type of data being considered (e.g., numerical, categorical, imagery, geospatial) and to the particular use case against which the data is being assessed as fit-for-purpose.

- Although data quality dimensions are broadly applicable across data types and formats, DQIs must be carefully selected, benchmarked, and evaluated based on the operational environment, data lifecycle stage, and user requirements. Their selection varies according to the domain, data exploitation lifecycle, and end-user needs.

- Applying data quality dimensions and indicators enables organizations to operationalize the core principle of data quality by:

- Quantitatively and qualitatively measuring data quality;

- Setting and monitoring data quality targets for continuous improvement;

- Supporting data governance and management processes;

- Benchmarking data quality across different management solutions;

- Evaluating the quality of systems and software that generate data.

- Furthermore, data quality dimensions and indicators should align with emerging standards and requirements to ensure responsible AI adoption through relevant assessments and toolkits.
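As an illustration of how indicators could score a data asset against some of the dimensions above, the following is a minimal sketch for tabular records. It is not part of the Framework: the record fields, function names, and scoring rules (simple ratios between 0 and 1) are assumptions chosen for the example, and real DQIs would need to be selected and benchmarked for the data type and use case.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical sample records; field names and values are illustrative only.
RECORDS = [
    {"id": "A1", "callsign": "ALPHA01", "lat": 50.88, "reported": "2025-01-01T10:00:00+00:00"},
    {"id": "A2", "callsign": None, "lat": 95.0, "reported": "2025-01-01T09:00:00+00:00"},
    {"id": "A2", "callsign": "BRAVO02", "lat": 48.85, "reported": "2024-12-31T10:00:00+00:00"},
]


def completeness(records, required):
    """Share of required fields that are present and non-empty."""
    total = len(records) * len(required)
    filled = sum(1 for r in records for f in required if r.get(f) not in (None, ""))
    return filled / total if total else 1.0


def uniqueness(records, key):
    """Ratio of distinct key values to the number of records."""
    values = [r[key] for r in records]
    return len(set(values)) / len(values) if values else 1.0


def validity(records, field, rule):
    """Share of non-missing values that satisfy a validation rule."""
    values = [r[field] for r in records if r.get(field) is not None]
    return sum(1 for v in values if rule(v)) / len(values) if values else 1.0


def timeliness(records, field, now, max_age):
    """Share of records whose timestamp is no older than max_age."""
    fresh = sum(1 for r in records if now - datetime.fromisoformat(r[field]) <= max_age)
    return fresh / len(records) if records else 1.0


if __name__ == "__main__":
    now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
    print("completeness:", completeness(RECORDS, ["id", "callsign", "lat"]))
    print("uniqueness:", uniqueness(RECORDS, "id"))
    print("validity:", validity(RECORDS, "lat", lambda v: -90 <= v <= 90))
    print("timeliness:", timeliness(RECORDS, "reported", now, timedelta(hours=6)))
```

Each function pairs a profiling step (what the data looks like) with a validation rule (what it should look like), which mirrors the way the text describes DQIs. The thresholds, such as the six-hour freshness window, are placeholders that a data owner would set per use case.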

Lifecycle-based data quality evaluation

- The quality of data used for decision-making or insight extraction is influenced by factors throughout the data exploitation lifecycle, from initial collection or creation to final disposal. As data evolves from “raw” (e.g., sensor data) into “curated” datasets ready for analysis, it undergoes transformations such as fusion, cleansing, quality assurance, annotation, and other pre-processing steps. As such, evaluating data quality at each stage of this lifecycle is crucial, as quality can change due to remediation efforts, whether manual or automated.

- Key determinants of data quality are data provenance and data lineage. Data provenance refers to the process of documenting the origin, historical context, and authenticity of the data. Data lineage tracks the movement and transformation of data through systems, processes, and applications.

- Both provenance and lineage are recorded in metadata, enabling users and decision-makers to assess how much a data asset has changed from its original state and how these changes impact its suitability for use.
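To make the distinction between provenance and lineage concrete, the sketch below records both alongside a data asset. The class and field names are hypothetical and do not correspond to any NATO metadata standard; the point is only that provenance is captured once at origin, while lineage grows with each transformation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageStep:
    operation: str   # e.g. "cleansing", "fusion", "annotation"
    actor: str       # system or person that applied the step
    applied_at: str  # ISO 8601 timestamp


@dataclass
class DataAsset:
    name: str
    source: str        # provenance: where the data originated
    collected_at: str  # provenance: when it was collected
    lineage: list = field(default_factory=list)

    def record_step(self, operation, actor, applied_at=None):
        """Append a transformation to the asset's lineage trail."""
        ts = applied_at or datetime.now(timezone.utc).isoformat()
        self.lineage.append(LineageStep(operation, actor, ts))
        return self

    def transformations(self):
        """List the operations applied since the original state, in order."""
        return [step.operation for step in self.lineage]


if __name__ == "__main__":
    # Hypothetical asset moving from "raw" to "curated".
    asset = DataAsset("track-feed", source="sensor-17", collected_at="2025-01-01T10:00:00+00:00")
    asset.record_step("cleansing", "ingest-pipeline").record_step("fusion", "analyst")
    print(asset.transformations())
```

A consumer can then inspect `transformations()` to judge how far the asset has moved from its original state before deciding whether it suits the task at hand.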

Data quality metadata (DQM) – Driving data quality awareness

- The inclusion of metadata about the quality of data is critical for decision-makers to adjust their decision-making processes according to the data quality level of the given data, and to help them to determine the appropriateness of the data quality level in the context of a specific task.

- Effective data quality management involves enhancing data assets with mandatory and interoperable metadata that makes the data assets and their quality understandable to decision-makers and data consumers down the line. These may include DQM fields such as:

- Assessments of intrinsic data quality (numerical or descriptive) against each of the DQ Dimensions;

- Data provenance, data lineage and data mapping;

- Other metadata related to Key Data Principles [2], including discoverability, accessibility, shared-ness, security, curation and interoperability, and trust.

- Along with active data quality management, the maintenance and upkeep of DQM itself will be required. Both the availability of up-to-date information on the quality of data and the management of data discovery services (e.g., data catalogues) will improve the speed and relevance of data-driven decision-making in a federated environment.
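One way to picture a DQM record is as a small, machine-readable structure attached to a data asset. The sketch below is an assumption for illustration only: the field names, the 0 to 1 scoring scale, and the JSON serialisation are not taken from the Framework or from any existing NATO metadata standard. Only the seven dimension names come from the text above.

```python
import json

# The seven DQ Dimensions named in the Framework.
DIMENSIONS = (
    "accuracy", "completeness", "consistency", "uniqueness",
    "timeliness", "validity", "granularity",
)


def build_dqm(asset_id, scores, assessed_at, notes=""):
    """Build a hypothetical DQM record; unassessed dimensions stay None."""
    unknown = set(scores) - set(DIMENSIONS)
    if unknown:
        raise ValueError(f"unknown dimensions: {sorted(unknown)}")
    for name, value in scores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} score must be in [0, 1]")
    return {
        "asset_id": asset_id,
        "assessed_at": assessed_at,  # lets consumers see how current the assessment is
        "dimensions": {d: scores.get(d) for d in DIMENSIONS},
        "notes": notes,              # room for descriptive assessments
    }


if __name__ == "__main__":
    record = build_dqm(
        "track-feed",
        {"completeness": 0.89, "validity": 0.67},
        assessed_at="2025-01-01T12:00:00+00:00",
        notes="Latitude range check failed for one record.",
    )
    print(json.dumps(record, indent=2))
```

Keeping unassessed dimensions explicit (as `None`) rather than omitting them signals to a consumer that no assessment exists, which is different from a low score.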

Data quality management – A problem-solving model for continuous improvement

- Data quality must be upheld through a systematic approach to assessing, remediating, and improving data quality within an organization. This approach to data quality management will combine people, processes, and technology to meet the data quality objectives that the organization has committed to. This involves aiming for data that is of sufficient quality with respect to intrinsic data quality indicators and its suitability for purpose and use.

- Data quality management looks at improving the technical aspects of data quality, yet the suite of tools, techniques, and organization practices involved in DQ management must also be subject to continuous review and improvement via data culture practices, training and data literacy initiatives, and governance.

- Data quality management serves as a formal process to measure, monitor, and report on data quality levels throughout the data exploitation lifecycle.

- An effective approach to data quality management will include:

- Data quality control processes for monitoring and control of conformity of data and repositories, appropriate design of applications creating and using data, data quality risk management, systematic use of a repository for data quality problems/risks/issues, leveraging automated tools and technologies for data quality control activities;

- Data quality assurance processes for review of data quality issues and data nonconformities, provision of measurement criteria for quality levels of data, development of metrics and measurement methods, measurement of data quality and process performance levels, evaluation of measurement results, and leveraging automated tools and technology for data quality assurance activities;

- Data quality improvement processes for analysis of the root causes of data quality issues to prevent future data nonconformities, data quality remediation with the use of manual or technical means to improve data quality, improving processes as needed, reporting of results of data quality and sharing of data quality condition to related stakeholders.

- The Data Management Association (DAMA) proposes a framework for ensuring data quality in a unified cycle, which can be further adapted for NATO-specific considerations, as depicted in Figure 2.

- Data quality control, assurance, remediation and improvement are executed through defined steps. Data quality is measured against standards and, if it does not meet those standards, root causes are identified and remediated if possible. Root causes may be found in any of the steps of the process, and may be technical or nontechnical. Once remediated, data should be monitored regularly to ensure it continues to meet quality requirements for the intended use.

Figure 2: Data quality management as an iterative process for continuous improvement
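The measure, compare, remediate, and monitor loop described in this section can be sketched in a few lines. This is a simplified illustration rather than the DAMA cycle itself: it tracks a single indicator (completeness of one field), uses a hypothetical reference lookup as the only remediation, and all names are assumptions made for the example.

```python
def measure_completeness(records, field):
    """Share of records in which the field is present and non-empty."""
    present = sum(1 for r in records if r.get(field) not in (None, ""))
    return present / len(records) if records else 1.0


def remediate_missing(records, field, lookup):
    """Fill missing values from a reference lookup keyed by record id."""
    fixed = []
    for r in records:
        if r.get(field) in (None, "") and r.get("id") in lookup:
            r = {**r, field: lookup[r["id"]]}  # copy, so the input is not mutated
        fixed.append(r)
    return fixed


def quality_cycle(records, field, target, lookup, max_rounds=3):
    """Measure, compare to the target, remediate, then measure again."""
    history = []
    for _ in range(max_rounds):
        score = measure_completeness(records, field)
        history.append(score)
        if score >= target:
            break  # target met; hand over to regular monitoring
        records = remediate_missing(records, field, lookup)
    return records, history


if __name__ == "__main__":
    # Hypothetical records and reference data.
    data = [{"id": 1, "unit": "A"}, {"id": 2, "unit": None}, {"id": 3, "unit": ""}]
    cleaned, scores = quality_cycle(data, "unit", target=0.6, lookup={2: "B"})
    print(scores)
```

If the score stops improving before the target is reached, automated remediation has been exhausted and the root cause (which the text notes may be nontechnical) needs to be investigated by other means.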

Technology enablers to data quality

- Automation tools can be deployed to streamline manual activities throughout the data exploitation lifecycle, thus ensuring accuracy and efficiency from data collection to its destruction. Moreover, digital tools and software can be deployed to support standardized, consistent, and optimized data quality assessment, remediation and improvement within and between organizations, entities, or functional areas, i.e. automating the processes underlying data quality management.

- Digital solutions for automating the data exploitation lifecycle refer to the application of tools, methods, and systems to improve or automate data generation, collection, processing, storage, and management, as well as aspects of data quality management. Examples include:

- Automated, autonomous, or connected data entry [3], Real-time data ingestion (Collection);

- Master data systems, Integrated data sources, Metadata enforcement (Organization);

- Automated data value validation (Creation or Generation) or data entry controls;

- Encryption, data masking, data loss prevention (Storage and Protection);

- Removal of inactive or redundant data (Disposition).

- Digital data quality solutions refer to the processes and technologies for identifying, understanding and correcting flaws in data in support of data governance and data-driven decision-making. Examples include:

- Data profiling and scanning;

- Compliance and conformance testing;

- Automated alert and remediation workflows;

- Real-time monitoring and anomaly detection (supported by novel data-processing methods such as ML and AI).
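As a small illustration of data profiling and anomaly detection, the sketch below summarises a numeric column and flags outliers. It deliberately uses a simple statistical rule (the modified z-score based on the median absolute deviation, with the commonly used 3.5 cut-off) instead of the ML or AI methods mentioned above; the function names and sample readings are assumptions made for the example.

```python
from statistics import median


def profile(values):
    """Basic profile of a numeric column that may contain missing values."""
    present = [v for v in values if v is not None]
    if not present:
        return {"count": len(values), "missing": len(values)}
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "min": min(present),
        "max": max(present),
        "median": median(present),
    }


def flag_anomalies(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold."""
    present = [v for v in values if v is not None]
    if not present:
        return []
    med = median(present)
    mad = median(abs(v - med) for v in present)  # median absolute deviation
    if mad == 0:
        return []  # no spread to compare against
    return [
        i for i, v in enumerate(values)
        if v is not None and 0.6745 * abs(v - med) / mad > threshold
    ]


if __name__ == "__main__":
    # Hypothetical sensor readings with one missing value and one outlier.
    readings = [10.1, 9.9, 10.0, None, 10.2, 55.0, 9.8]
    print(profile(readings))
    print(flag_anomalies(readings))
```

A flagged index could feed an automated alert or remediation workflow of the kind listed above. A median-based rule is used here because a single extreme value distorts the mean and standard deviation far more than it distorts the median.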

1. Data includes NATO-generated Enterprise data, national data, commercial data, and publicly available or open source data.

2. The Data Strategy for the Alliance (PO(2025)0049) outlines the following Key Data Principles to govern the design of new data-related capabilities and alignment of existing data initiatives across the Alliance: Discoverable, Accessible, Trusted, Regulated, Interoperable & Curated, Shared, and Secure.

3. Applying the Internet of Things (IoT) in the military domain to perform data entry at the sensor point has the potential to reduce the risk of manual error and the latency in transmitting data, and to enhance the effectiveness of point-to-point communications between devices, thereby improving data exploitation efforts at the tactical level.